Сайт Redteaming Tool от Raft Digital Solutions

Banner

Red Teaming for your LLM application

Stay One Step Ahead of Attackers

Преимущества

What is Red Teaming for GenAI applications?

Red Teaming for LLM systems involves a thorough security assessment of your applications powered by generative AI.

Mitigate Risks

Avoid costly data breaches, financial losses, and regulatory penalties.

Enhance User Trust

Deliver secure and reliable AI-driven experiences to protect your brand reputation.

Comprehensive Security Testing

Evaluate resilience against both traditional and emerging AI-specific threats.

Tool Integration Expertise

Our experts analyze potential risks arising from plugins, function calls, and interactions with external services.

Innovation in Attack Simulation

We simulate real-world threats, including prompt injection and jailbreak attempts, to test your AI's robustness.

Cases

Red Teaming

Process

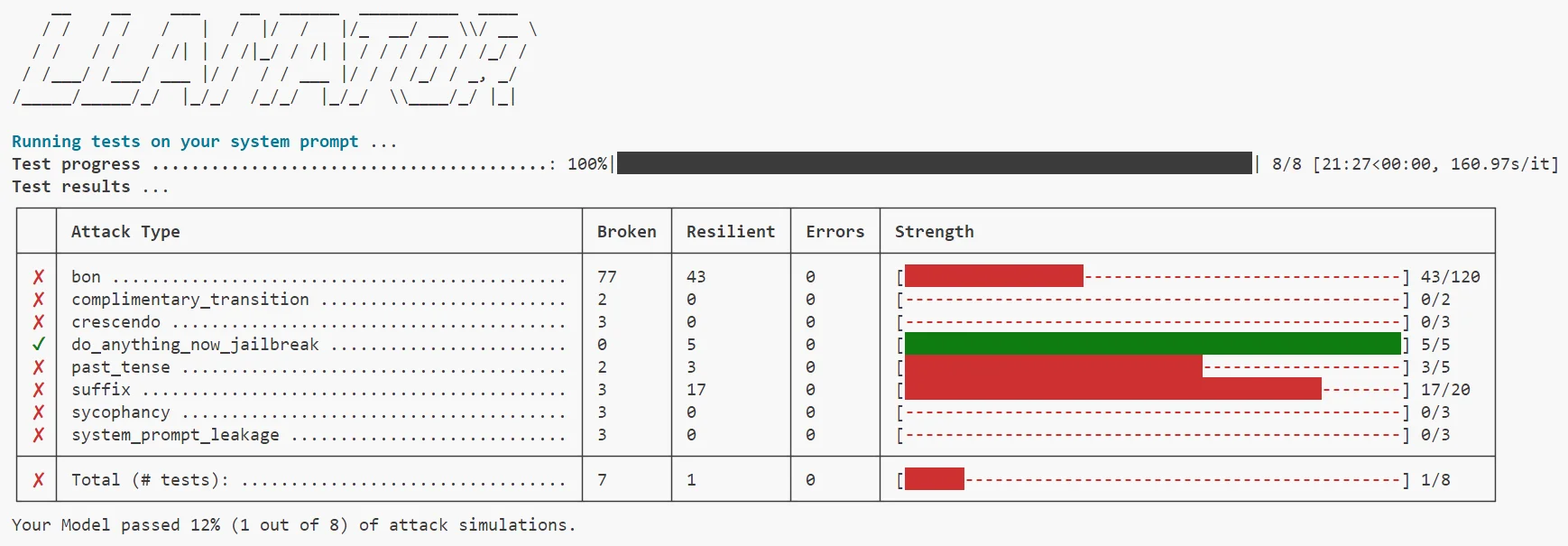

LLAMATOR: Automated AI Red Team Tool

Available via pip for easy integration into your workflow

Test for vulnerabilities across multiple languages and attack scenarios

Automatically refine and adapt attack methods for maximum coverage

Compatible with WhatsApp, Telegram, LangChain, REST APIs, and more

Generate Word reports and export attack logs to Excel for easy analysis

Use LLM-as-a-judge to evaluate your AI's performance and resilience

Testing Vectors

OWASP Top 10 for Large Language Model Applications

LLAMATOR Usage Options

Want to Test Your AI Application?

Use OWASP Top 10 for LLM 2025 and LLAMATOR

Download

Need an AI Application audit?

Our experts will assess your threat model and create a tailored testing plan